The Rise of the “Prompt Engineer”: Job or Skill?

“A year ago, ‘Prompt Engineer’ sounded like a niche, emerging job. Today, the practice looks more like literacy — a skill most knowledge workers will need.”

A year can be a lifetime in AI. Titles that felt experimental now sound oddly specific. The truth emerging in teams everywhere is simple: prompt engineering isn’t a role reserved for a few specialists — it’s a core, everyday skill. If you write specs, query data, compose emails, draft UX copy, scaffold code, or review security policies with the help of an AI system, you’re already doing prompt engineering.

Writing effective prompts is a lot like writing excellent code comments or documentation. It’s about clarity, structure, and understanding how the system interprets instructions. The twist is that your “comments” are no longer inert: they are executable instructions to a model that can transform text, code, and images on the fly.

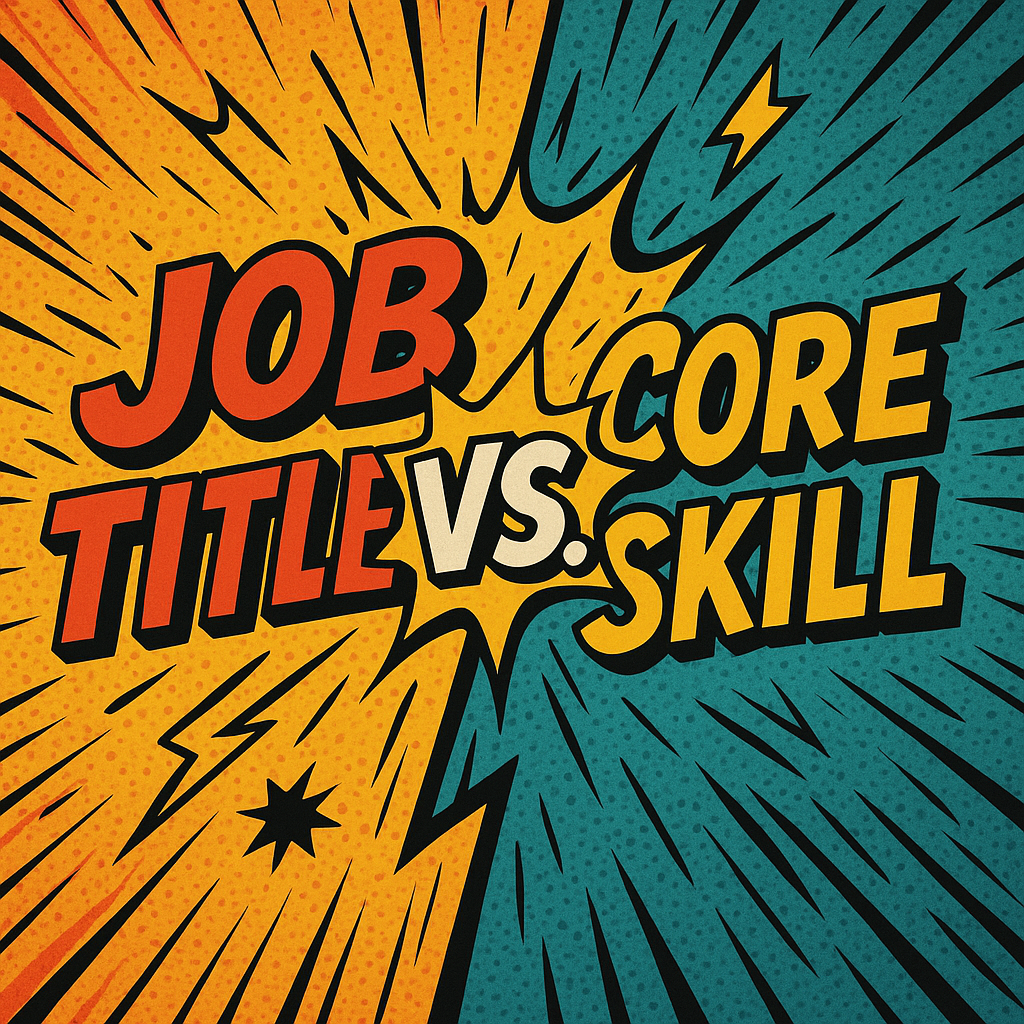

Job Title vs. Core Skill

Will dedicated “Prompt Engineer” roles exist? In the near term, yes — especially inside platform teams, AI product groups, agencies, and enterprises building repeatable AI workflows. Over time, as patterns stabilize and tools improve, the title may fade. The skill will not.

Think “SQL Developer” in the 2000s versus today’s analyst who can comfortably write SQL. The specialization didn’t disappear; it diffused. Prompting is on the same path: a specialty becoming table stakes.

Why It Matters Across Roles

Developers

- Scaffold modules, tests, and migrations with consistent patterns.

- Constrain output to project standards and security policies.

- Break work into explicit, structured steps so the model reasons stepwise, and keep secrets out of the prompt text.

Designers & UX Writers

- Generate tone-consistent microcopy and empty states.

- Explore alternatives within voice and brand constraints.

- Design dialogue flows for AI features with clarity and safety.

Analysts & PMs

- Translate plain-English questions into structured, auditable prompts.

- Summarize research with citations and guard against hallucinations.

- Prototype specs, test plans, and acceptance criteria faster.

What Prompt Engineering Really Is

At its core, prompt engineering is specification design. You describe context, constraints, and goals in a way that steers the model toward useful behavior while leaving room for creativity where appropriate. Good prompts behave like good interfaces: predictable, composable, and reusable.

A Simple Working Framework

- Role: Define the perspective or expertise the model should adopt.

- Context: Provide inputs, constraints, environment, and examples.

- Task: State what to do, the audience, and the expected outcome.

- Format: Specify the output schema, style, or filetype.

- Checks: Add acceptance criteria, limits, or red-team tests.
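One way to make the five parts reusable is to treat them as fields of a small template. The following is a minimal sketch, not a standard API: `PromptSpec` and its field names are hypothetical, chosen only to mirror the framework above.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the five framework parts."""
    role: str
    context: str
    task: str
    output_format: str
    checks: list[str] = field(default_factory=list)

    def render(self) -> str:
        """Render the spec as labeled sections, one per line."""
        lines = [
            f"Role: {self.role}",
            f"Context: {self.context}",
            f"Task: {self.task}",
            f"Format: {self.output_format}",
        ]
        if self.checks:
            lines.append("Checks:")
            lines.extend(f"- {check}" for check in self.checks)
        return "\n".join(lines)


spec = PromptSpec(
    role="Senior Node.js engineer",
    context="Jest, TypeScript, userService.ts",
    task="Write unit tests with table-driven cases",
    output_format="A single file userService.spec.ts",
    checks=["90%+ branch coverage", "No external network calls"],
)
print(spec.render())
```

Keeping the parts as separate fields means a team can review or swap one of them (say, the checks) without touching the rest of the prompt.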

Before / After Example

Weak:

Write unit tests for the user service.

Stronger:

Role: Senior Node.js engineer.

Context: Jest, TypeScript, userService.ts with createUser(email, password), getUserById(id).

Task: Write unit tests with table-driven cases; mock DB calls; cover success, duplicate email, invalid input.

Format: A single file userService.spec.ts; no comments; use describe/it.

Checks: 90%+ branch coverage; fail if external network calls appear.

The second prompt gives role, context, task, format, and checks — enough structure to yield consistent, reviewable output.

Beyond One-Shot: Multi-Turn Chains and Prompt Debugging

As teams build AI features, prompts evolve from single instructions into small conversations — chains of steps that collect inputs, validate constraints, transform data, and produce a final artifact. This looks a lot like function composition and testing.

A Simple Three-Step Chain

- Normalize inputs (strip PII, validate schema, enforce types).

- Transform (summarize, rewrite, plan, or generate code/doc).

- Constrain (apply style guide, security rules, output schema).

Each step can be a separate prompt, with explicit contracts between them. That makes errors easier to localize and fix.
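The three steps can be sketched as plain functions with explicit contracts between them. This is an illustration under stated assumptions: `fake_model` is a stub standing in for a real model call, the email regex is a simplified stand-in for real PII stripping, and every function name here is hypothetical.

```python
import re
from typing import Callable

# Simplified email pattern; real PII detection needs far more than this.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def normalize(raw: str) -> str:
    """Step 1: collapse whitespace, reject empty input, mask email addresses."""
    text = " ".join(raw.split())
    if not text:
        raise ValueError("normalize: empty input")
    return EMAIL.sub("[email]", text)


def transform(text: str, model: Callable[[str], str]) -> str:
    """Step 2: ask the model for a one-sentence summary."""
    return model(f"Summarize in one sentence:\n{text}")


def constrain(summary: str, max_words: int = 30) -> str:
    """Step 3: enforce the output contract before anything downstream sees it."""
    if EMAIL.search(summary):
        raise ValueError("constrain: output contains an email address")
    if len(summary.split()) > max_words:
        raise ValueError("constrain: output exceeds word limit")
    return summary.strip()


def run_chain(raw: str, model: Callable[[str], str]) -> str:
    """Compose the three steps; each error message names the step that failed."""
    return constrain(transform(normalize(raw), model))


def fake_model(prompt: str) -> str:
    """Stub for a real model call: echoes the last line of the prompt."""
    return prompt.splitlines()[-1]


print(run_chain("  Contact  bob@example.com about   the launch. ", fake_model))
# prints: Contact [email] about the launch.
```

Because each step raises an error prefixed with its own name, a failure points directly at the stage that broke the contract.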

Prompt Debugging Checklist

- Reduce ambiguity: define audience, tone, and output format.

- Provide minimal but sufficient examples (few-shot).

- Isolate steps: split the task; add validation gates.

- Constrain with schemas: require JSON and validate.

- Add negative tests: include “do not” and edge cases.

- Measure drift: snapshot outputs and diff over time.
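The last item, measuring drift, can be approximated with Python's standard `difflib` module. The sketch below assumes snapshots are stored as plain strings; the function names and sample texts are made up for illustration.

```python
import difflib


def drift_report(snapshot: str, current: str) -> list[str]:
    """Return unified-diff lines between a stored snapshot and a fresh output."""
    return list(
        difflib.unified_diff(
            snapshot.splitlines(),
            current.splitlines(),
            fromfile="snapshot",
            tofile="current",
            lineterm="",
        )
    )


def similarity(snapshot: str, current: str) -> float:
    """Ratio in [0, 1]; 1.0 means the two outputs are identical."""
    return difflib.SequenceMatcher(None, snapshot, current).ratio()


old = "Risk: vendor lock-in\nNext step: run a pilot"
new = "Risk: vendor lock-in\nNext step: schedule a pilot"

for line in drift_report(old, new):
    print(line)
print(f"similarity: {similarity(old, new):.2f}")
```

A team might fail a check when the similarity drops below an agreed threshold; the right threshold depends on how much variation the task tolerates.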

Security, Compliance, and Team Standards

The best prompts aren’t long; they’re precise. Precision is not only a quality issue — it’s a safety issue. Well-designed prompts encode team policies and reduce the chance of leaking secrets, inventing facts, or violating brand and legal guidelines.

- Secrets handling: instruct the model never to fabricate keys or tokens; route secrets via tooling, not text.

- Data boundaries: specify allowed sources and forbid external calls unless stubbed.

- Style and brand: include tone, banned phrases, and reading-level constraints.

- Citations and provenance: require references for summaries and analyses.

- Schema locks: demand JSON outputs that validate before proceeding.

Constraint block (append to prompts):

- Do not output secrets or credentials.

- Cite all claims with provided sources only.

- Output must validate against the provided JSON Schema.

- If unsure, say "insufficient context" and ask for the missing field(s).

Patterns You Can Reuse

Rewrite with Constraints

Rewrite the following as a concise summary (120–150 words) for product executives.

Constraints: no marketing fluff; include 2 risks; end with a next step.

Input: <paste text>

Output: plain text only.

Code Generation with Tests

Role: Senior TypeScript engineer.

Task: Implement a pure function formatCurrency(amt, curr) and a Jest test file.

Constraints: no external libs; support INR, USD, EUR; handle negatives.

Output: two files in one response, delimited by filenames.

Schema-Bound Answers

Return JSON matching:

{ "title": "string", "risks": ["string"], "confidence": <number between 0 and 1> }

If uncertain, set "confidence" <= 0.4 and list missing inputs in "risks".
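On the receiving side, the response should be checked before anything else uses it. The following is a minimal sketch using only the standard library; `validate_answer` and `needs_follow_up` are hypothetical helpers, and a team that already uses a JSON Schema validator would likely rely on that instead.

```python
import json


def validate_answer(raw: str) -> dict:
    """Parse a model response and check it against the shape requested above."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on bad JSON
    if not isinstance(data, dict):
        raise ValueError("top-level value must be an object")
    if not isinstance(data.get("title"), str):
        raise ValueError("'title' must be a string")
    risks = data.get("risks")
    if not isinstance(risks, list) or not all(isinstance(r, str) for r in risks):
        raise ValueError("'risks' must be a list of strings")
    confidence = data.get("confidence")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(confidence, bool) or not isinstance(confidence, (int, float)):
        raise ValueError("'confidence' must be a number")
    if not 0 <= confidence <= 1:
        raise ValueError("'confidence' must be between 0 and 1")
    return data


def needs_follow_up(answer: dict, threshold: float = 0.4) -> bool:
    """Low confidence signals that 'risks' lists missing inputs."""
    return answer["confidence"] <= threshold


answer = validate_answer(
    '{"title": "Q3 plan", "risks": ["missing budget figures"], "confidence": 0.3}'
)
print(needs_follow_up(answer))
```

Rejecting a malformed response at this gate is what makes the "insufficient context" path usable: the caller can ask for the missing inputs instead of acting on a guess.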

Tooling and Team Workflow

Treat prompts like code. Version them, test them, and review them. Small habits compound:

- Keep prompts in source control with ownership and history.

- Create prompt libraries and templates per team (dev, design, data).

- Add unit tests for schema validity and red-team cases.

- Use environment variables and tools for secret handling.

- Define acceptance criteria for outputs and automate checks.
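As one example of an automated red-team check, a test can scan model output for strings that look like credentials. The patterns below are illustrative assumptions, not a complete or authoritative list, and `find_secret_like` and `check_output` are hypothetical helpers.

```python
import re

# Illustrative patterns only; a real team would maintain and tune its own list.
SECRET_PATTERNS = [
    re.compile(r"AKIA[0-9A-Z]{16}"),                    # shape of an AWS access key ID
    re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),  # PEM private key header
    re.compile(r"(?i)\b(?:api[_-]?key|token|password)\s*[:=]\s*\S+"),
]


def find_secret_like(text: str) -> list[str]:
    """Return substrings of the text that look like credentials."""
    hits = []
    for pattern in SECRET_PATTERNS:
        hits.extend(match.group(0) for match in pattern.finditer(text))
    return hits


def check_output(text: str) -> None:
    """Raise if the output contains anything secret-like; use as a test gate."""
    hits = find_secret_like(text)
    if hits:
        raise AssertionError(f"{len(hits)} secret-like string(s) in output")


check_output("Deploy finished in 42s.")  # passes silently
print(find_secret_like("Set api_key = abc123 in the config."))
```

A check like this complements, rather than replaces, routing secrets through tooling: it catches leaks in output but does nothing to keep secrets out of prompts in the first place.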

Career Outlook

The dedicated role of “Prompt Engineer” may narrow as platform capabilities improve, but the competence will embed itself everywhere. Teams will still need specialists to build robust chains, integrate tools, enforce policy, and measure quality, much as DevOps did not eliminate software engineers but raised the bar for everyone.

“The best prompts aren’t long — they’re precise. They give context, constraints, and goals, while leaving room for AI creativity.”

In practice, that means learning to craft prompts that shape AI output to fit team standards, security needs, and project goals — and doing it repeatably.

Conclusion

Prompt engineering is less a job title than a professional literacy. It sits at the intersection of clarity, structure, and systems thinking. As AI becomes a standard part of every stack, fluency in prompting will look like fluency in version control or code review: assumed.

Learn to specify context. Learn to define constraints. Learn to ask for outputs you can test, validate, and ship. The models will keep evolving; the craft of precise instruction will remain.

Titles will change. The skill is here to stay.